The Data Normalization Logic Used in Every Modern Chart of Liters: The Four Most Popular Techniques

In statistics and machine learning, data normalization refers to adjusting values measured on different scales to a common scale. In database design, the same term describes how a data model is organized, and this tutorial describes how to normalize a data model. Applied to databases, the technique reduces redundancy, improves data integrity, and standardizes information for consistency.

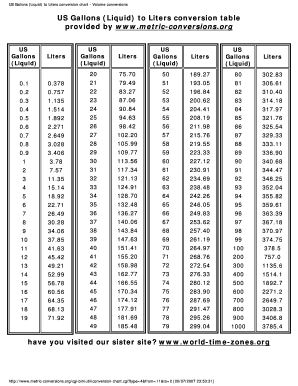


Normalization plays a crucial role in databases, analytics, and machine learning. Database normalization is a series of steps followed to obtain an efficient data model. In analytics and machine learning, normalization enhances data quality and analysis by ensuring all features contribute equally, instead of letting features with large numeric ranges dominate.
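To make "ensuring all features contribute equally" concrete, here is a minimal sketch using made-up records with two features on very different scales (age in years, income in dollars). The helper names (`min_max_scale`, `euclidean`, `age_share`) and the data are hypothetical, chosen only for illustration. It measures how much of a Euclidean distance comes from the age feature before and after min-max scaling.

```python
import math

def min_max_scale(values):
    """Rescale a list of numbers to [0, 1] using (x - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature has no spread; map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def euclidean(a, b):
    """Straight-line distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def age_share(a, b):
    """Fraction of the squared distance contributed by the first (age) feature."""
    total = sum((x - y) ** 2 for x, y in zip(a, b))
    return (a[0] - b[0]) ** 2 / total

# Hypothetical records: (age in years, annual income in dollars).
ages = [25, 35, 45]
incomes = [40_000, 41_000, 90_000]

raw = list(zip(ages, incomes))
scaled = list(zip(min_max_scale(ages), min_max_scale(incomes)))

# On raw values, the income term swamps the 10-year age gap.
raw_d01 = euclidean(raw[0], raw[1])
# After scaling, the age difference becomes visible to the distance.
scaled_d01 = euclidean(scaled[0], scaled[1])
```

For the first two records, the age gap accounts for roughly 0.01% of the raw squared distance, but most of it after scaling, which is why distance-based methods are usually fed scaled features.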

Database normalization acts as a set of rules and a system of organization for your data warehouse, ensuring every piece of information is stored logically and efficiently.

This guide breaks down 1NF, 2NF, and 3NF in simple terms with easy examples: how to design efficient, normalized databases that reduce redundancy and improve data integrity, and when (and why) to normalize or denormalize your data model. The normal forms run from 1NF up to 6NF, each one removing a further kind of redundancy. Normalization is an important process in database design that improves the database's efficiency, consistency, and accuracy.
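As a small illustration of first normal form (1NF), which requires each field to hold a single atomic value, the sketch below splits a comma-separated phone list into one row per phone number. The table layout, column names, and `to_first_normal_form` helper are hypothetical, and plain Python lists of dicts stand in for database tables.

```python
# Hypothetical un-normalized rows: the "phones" column packs several values
# into one comma-separated string, which violates first normal form (1NF).
unnormalized = [
    {"customer_id": 1, "name": "Ana", "phones": "555-0101, 555-0102"},
    {"customer_id": 2, "name": "Ben", "phones": "555-0201"},
]

def to_first_normal_form(rows):
    """Split the multi-valued 'phones' field so each row holds one atomic value."""
    customers = []
    customer_phones = []
    for row in rows:
        # Attributes with a single value per customer stay in one table.
        customers.append({"customer_id": row["customer_id"], "name": row["name"]})
        # Each phone number becomes its own row, linked back by customer_id.
        for phone in row["phones"].split(","):
            customer_phones.append(
                {"customer_id": row["customer_id"], "phone": phone.strip()}
            )
    return customers, customer_phones

customers, customer_phones = to_first_normal_form(unnormalized)
```

Ana now has two rows in `customer_phones` and one in `customers`, so adding a third number is an insert rather than string surgery on an existing field.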

Normalization makes the data easier to manage and maintain, and it keeps the database adaptable to changing business needs. In many cases, reaching 3NF is sufficient to ensure data integrity while maintaining good query performance. The normal forms are tiered, and each rule builds on the one before: you can apply the second tier of rules only if your data already meets the first tier, and so on. Many normal forms exist, but four of the most common and widely used ones apply to most data sets.
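The tiered idea can be sketched with two hypothetical tables. In the first, `product_name` depends on only part of the composite key `(order_id, product_id)`, a partial dependency that 2NF removes. In the second, `customer_city` depends on `customer_id` rather than directly on `order_id`, a transitive dependency that 3NF removes. All table names, columns, and helper functions here are illustrative assumptions, not a prescribed schema.

```python
# Hypothetical order-line table keyed by (order_id, product_id).
# product_name depends only on product_id: a partial dependency (2NF violation).
order_lines = [
    {"order_id": 10, "product_id": "P1", "product_name": "Pen", "qty": 3},
    {"order_id": 10, "product_id": "P2", "product_name": "Ink", "qty": 1},
    {"order_id": 11, "product_id": "P1", "product_name": "Pen", "qty": 5},
]

def remove_partial_dependency(rows):
    """2NF step: move product_name into a table keyed by product_id alone."""
    products = {}
    lines = []
    for r in rows:
        products[r["product_id"]] = r["product_name"]  # stored once per product
        lines.append(
            {"order_id": r["order_id"], "product_id": r["product_id"], "qty": r["qty"]}
        )
    return products, lines

# Hypothetical orders table keyed by order_id.
# customer_city depends on customer_id, not on order_id: a transitive dependency.
orders = [
    {"order_id": 10, "customer_id": "C1", "customer_city": "Lisbon"},
    {"order_id": 11, "customer_id": "C1", "customer_city": "Lisbon"},
    {"order_id": 12, "customer_id": "C2", "customer_city": "Porto"},
]

def remove_transitive_dependency(rows):
    """3NF step: move customer_city into a table keyed by customer_id."""
    cities = {r["customer_id"]: r["customer_city"] for r in rows}
    slim = [{"order_id": r["order_id"], "customer_id": r["customer_id"]} for r in rows]
    return cities, slim

products, lines = remove_partial_dependency(order_lines)
cities, slim = remove_transitive_dependency(orders)
```

One caveat of this simplified sketch: if the source rows disagree (say, two different cities for `C1`), the dict keeps whichever value it saw last, so a real migration would check for such conflicts first.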

Data normalization: what a normalized database looks like and why table structure matters

Data normalization is the process of structuring information in a database to cut down on redundancy and make that database more efficient. Think of normalization as a way to make sure that every field and table in your database is organized logically, so that you can avoid data anomalies when inserting, updating, or deleting records. Data normalization is essential for a database to be efficient, accurate, and easily scalable. Normalization enhances security and structure.
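An update anomaly is easy to reproduce. The sketch below uses hypothetical in-memory tables: in the denormalized version, each order row repeats the customer's email, so changing it on one row leaves two conflicting values. In the normalized version, the email is stored once and every order reads it through the customer key.

```python
# Hypothetical denormalized table: each order row repeats the customer's email.
denormalized_orders = [
    {"order_id": 1, "customer": "Ana", "email": "ana@old.example"},
    {"order_id": 2, "customer": "Ana", "email": "ana@old.example"},
]

# Update anomaly: changing the email on only one row leaves the table
# contradicting itself about Ana's address.
denormalized_orders[0]["email"] = "ana@new.example"
emails_for_ana = {r["email"] for r in denormalized_orders if r["customer"] == "Ana"}

# Normalized version: the email lives in exactly one place.
customers = {"Ana": {"email": "ana@old.example"}}
orders = [{"order_id": 1, "customer": "Ana"}, {"order_id": 2, "customer": "Ana"}]
customers["Ana"]["email"] = "ana@new.example"  # one update, every order sees it
emails_seen_by_orders = {customers[o["customer"]]["email"] for o in orders}
```

`emails_for_ana` ends up holding two different addresses, while `emails_seen_by_orders` holds only the new one.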

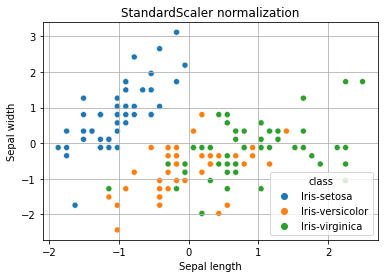

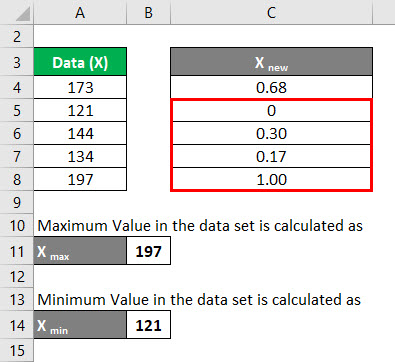

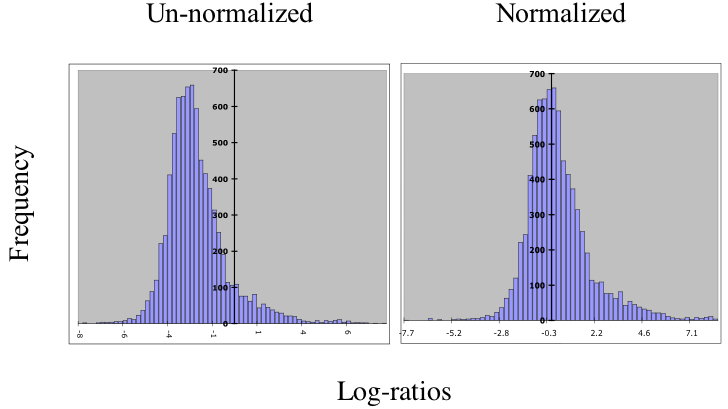

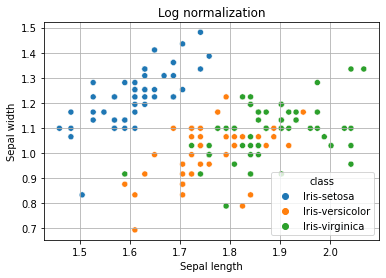

It is therefore important to balance normalization against performance. In analytics, normalization and scaling can also improve data visualization: transforming data into a common range makes different features easier to visualize and compare. In this sense, data normalization is a preprocessing method that rescales feature values to a specific range, usually between 0 and 1.

It is a feature scaling technique used to transform data into a standard range.
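A minimal min-max scaling sketch follows, assuming the common convention of learning the minimum and maximum from training data and reusing them on new data. The function names `fit_min_max` and `transform_min_max` are hypothetical. The general formula maps $[lo, hi]$ onto $[a, b]$ as $x' = a + (x - lo)(b - a)/(hi - lo)$, which reduces to $(x - lo)/(hi - lo)$ for the default range $[0, 1]$.

```python
def fit_min_max(values):
    """Learn the minimum and maximum from (training) data."""
    return min(values), max(values)

def transform_min_max(values, lo, hi, new_min=0.0, new_max=1.0):
    """Map values from [lo, hi] onto [new_min, new_max].

    x' = new_min + (x - lo) * (new_max - new_min) / (hi - lo)
    """
    if hi == lo:
        # No spread in the fitted data; send everything to the lower bound.
        return [new_min for _ in values]
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]

train = [10.0, 20.0, 30.0]
lo, hi = fit_min_max(train)
scaled_train = transform_min_max(train, lo, hi)  # [0.0, 0.5, 1.0]

# A new value beyond the training maximum lands outside [0, 1].
scaled_new = transform_min_max([40.0], lo, hi)   # [1.5]
```

Reusing the training `lo` and `hi` keeps new data on the same scale as the data a model was fitted on; the trade-off is that out-of-range values are not clipped, as `scaled_new` shows.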

I kept running into the same problem on analytics teams: a countplot that looked correct but told a misleading story. Raw counts are fine when categories are balanced, but they quietly lie when one category dominates the dataset. If you want a fair comparison across multiple categorical variables, you need normalization.
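One way to normalize a countplot is to plot within-group shares instead of raw counts. The sketch below uses hypothetical survey data where one region has five times as many responses as the other, and a hypothetical helper `normalized_counts` that converts counts into proportions per group using only the standard library.

```python
from collections import Counter, defaultdict

# Hypothetical survey rows: (region, answer). "North" has far more responses.
rows = (
    [("North", "yes")] * 80 + [("North", "no")] * 20
    + [("South", "yes")] * 5 + [("South", "no")] * 15
)

def normalized_counts(pairs):
    """For each group, return the share of each category within that group."""
    by_group = defaultdict(Counter)
    for group, category in pairs:
        by_group[group][category] += 1
    result = {}
    for group, counts in by_group.items():
        total = sum(counts.values())
        result[group] = {category: n / total for category, n in counts.items()}
    return result

shares = normalized_counts(rows)
```

Raw counts show 80 "yes" answers in the North against 5 in the South; the normalized shares (0.8 versus 0.25) answer the fairer question of how each region actually responded. In pandas, `Series.value_counts(normalize=True)` computes the same kind of proportions for a single series.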

In my experience, the […]

Data normalization is an integral part of database design, as it organizes information structures to eliminate redundancy and maintain data integrity.

This methodical process turns a chaotic collection of data into a logical arrangement through normal forms: progressive rules that prevent insertion, update, and deletion anomalies.
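The three anomaly types can be shown against a small normalized schema. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables are hypothetical. Note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON` is set on the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    city        TEXT NOT NULL   -- stored once per customer, so no update anomaly
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(customer_id)
);
""")

# Insertion: a customer can be recorded before placing any order.
conn.execute("INSERT INTO customers VALUES (1, 'Ana', 'Lisbon')")
conn.execute("INSERT INTO orders VALUES (100, 1)")

# Deletion: removing the only order does not erase the customer's details.
conn.execute("DELETE FROM orders WHERE order_id = 100")
remaining = conn.execute("SELECT name, city FROM customers").fetchall()

# Integrity: an order pointing at a non-existent customer is rejected.
try:
    conn.execute("INSERT INTO orders VALUES (101, 99)")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True
```

In a single denormalized table holding both order and customer columns, deleting order 100 would also have deleted the only record of Ana's city.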

Normalization improves database structure by reducing redundancy and enhancing data integrity. It has benefits and challenges, and the process moves through stages such as 1NF, 2NF, and 3NF toward efficient data management. Done well, it transforms disorganized datasets into clean, structured, and reliable assets.

The normal forms have practical applications in business, healthcare, and analytics. Data normalization is also a fundamental technique in data science and machine learning, where the term takes on a different meaning: transforming numerical data into a standard range, typically between 0 and 1 (scaling), or around a zero mean with unit standard deviation (standardization).
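Standardization, the second transformation mentioned above, computes $z = (x - \mu)/\sigma$. This minimal sketch uses the standard-library `statistics` module; the `standardize` name is hypothetical, and dividing by the population standard deviation (rather than the sample one) is a stated choice here, the same one scikit-learn's `StandardScaler` makes.

```python
import statistics

def standardize(values):
    """Return z = (x - mean) / std, using the population standard deviation."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        # A constant feature has no spread; every value sits at the mean.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

# Mean 5, population standard deviation 2.
z = standardize([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])
```

The result has mean 0 and unit standard deviation, so a value of 2.0 reads as "two standard deviations above the mean" regardless of the original units.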

Data normalization is the process of organizing data in a database to reduce redundancy and improve data integrity.

It involves restructuring your data to eliminate duplicates, standardize formats, and create logical relationships between different data elements. And yet, normalization is little understood and little used.
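Standardizing formats and eliminating duplicates often go together, because duplicates only become visible once formatting differences are removed. The sketch below uses hypothetical contact records and helper names. Lowercasing whole email addresses is a simplifying assumption: most providers treat them case-insensitively, but the standards allow case-sensitive local parts.

```python
# Hypothetical contact records with inconsistent formatting.
raw_contacts = [
    {"email": " Ana@Example.com ", "country": "pt"},
    {"email": "ana@example.com", "country": "PT"},
    {"email": "ben@example.com", "country": "es "},
]

def standardize_contact(rec):
    """Trim whitespace, lowercase the email, uppercase the country code."""
    return {
        "email": rec["email"].strip().lower(),
        "country": rec["country"].strip().upper(),
    }

def deduplicate(records, key):
    """Keep the first record seen for each key value, preserving order."""
    seen = set()
    out = []
    for r in records:
        if r[key] not in seen:
            seen.add(r[key])
            out.append(r)
    return out

clean = deduplicate([standardize_contact(r) for r in raw_contacts], key="email")
```

Run in the other order, deduplication would miss the first two records, since `" Ana@Example.com "` and `"ana@example.com"` only match after standardization.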